Déjà vu: Are we back to Betamax?

Predicting the rate and direction of technology adoption often seems to be as much of an art (indeed, perhaps a dark art) as it is a science. It was nearly half a century ago (!) that one of the first real format wars emerged – where Sony and JVC clashed in the VHS/Betamax battle. Decades of economic analysis have been devoted to the emergence of the war – and its outcomes. By rights, Betamax should have been the winner: it offered greater horizontal resolution, lower noise, reduced luma/chroma crosstalk, and overall was visually the better standard. It also had a year more than VHS to penetrate and shore up its market hold. But consumers are tricky things, and a significant range of what economists like to call ‘network externalities’ ended up holding sway over the outcome.

And even with 50 years of observing both consumer and industry behavior in relation to technology adoption, it seems that collectively we’re not necessarily any better placed to guess what factors will influence take-up. One only has to look at Zuckerberg’s quiet withdrawal from the multi-billion-dollar Metaverse, as his eyes turn to the parallel multi-billion-dollar rise of ChatGPT, to see how even the biggest players can’t always read the market.

ATSC, as easy as 1, 2, 3. Right?

So, what does all this talk of tech-take-up rates tell us about the ATSC 3.0 saga? The standard, in development for more than a decade and approved by the FCC for rollout in 2017, still seems to languish in no-man’s land, failing to hit its true potential, always on the cusp but never in the limelight.

Well, as with Betamax, it’s certainly not any factors pertaining to its technological capabilities holding it back. We’ve talked about the benefits at some length in our previous blog, but to sum up its benefits in a sentence: ATSC 3.0 has the ability to bring all the advantages of IP to an entirely wireless environment – improving broadcast quality, increasing functionality, augmenting reliability and security, extending reach, and just generally improving everything – for broadcasters, for advertisers, and for audiences. Indeed, the benefits really can’t be overstated, because although there is a great focus on ATSC 3.0 as ‘Next Gen TV’, the truth is it has applications that extend well beyond just broadcast, since it in effect represents more generally a wireless IP distribution platform. Which is quite a big deal really.

So, where’s the issue? Broadcasters are champing at the bit to make use of its commercial potential. Consumer manufacturers are (mostly) supporting it. What’s the obstacle?

It is, essentially, regulatory. While the FCC was ready to endorse the standard all the way back in 2017, their approach was a tad… tepid.

In essence, in 2017, the FCC authorized – but did not instruct – television broadcasters to use ATSC 3.0 if they so wished – meaning it was a voluntary and market-driven approach to adoption. More than this, they implemented a requirement that broadcasters maintain a nearly identical broadcast in the original ATSC 1.0 standard as well. So, broadcasters could choose to move to ATSC 3.0, but only in addition to ATSC 1.0, not instead of it. While that requirement was due to ‘sunset’ this year, it appears to have been given a stay of execution: broadcasters are still having to devote dual resources to ATSC 1.0 and 3.0 outputs, despite being eager to move forward properly with the latter.

Note how much this differs from the approach taken in the transition from analog NTSC to ATSC back in 2007–2009, which was mandatory. Indeed, it’s almost the inverse of it – since with the NTSC/ATSC switch they actually required broadcasters to stop broadcasting analog in 2009. So there’s a marked difference in approach here: in the NTSC/ATSC transition, the FCC actively pushed for the end of an old standard, and the heralding of a new one. Now, in this new era of ATSC 1.0/3.0 transition, the FCC is actively pushing the maintenance of an old standard, and crippling broadcasters in their adoption of the new one – not just in terms of operational inefficiency, but also in the undue occupation of huge swathes of the broadcast spectrum.

Arguably, then, the delay has nothing to do with the technology, and nothing to do with the market – and everything to do with the regulatory handcuffs that the FCC has imposed.

Nonetheless, the FCC argues it is on the side of progress. In April, FCC chair Jessica Rosenworcel said: “Today, we are announcing a public-private initiative, led by the National Association of Broadcasters, to help us work through outstanding challenges faced by industry and consumers. This Future of Television initiative will gather industry, government, and public interest stakeholders to establish a roadmap for a transition to ATSC 3.0 that serves the public interest. A successful transition will provide for an orderly shift from ATSC 1.0 to ATSC 3.0 and will allow broadcasters to innovate while protecting consumers, especially those most vulnerable.”

The pressure is on

It is clear in Rosenworcel’s statement that the FCC’s motivation to push forward comes strongly from pressure being put on them by the NAB. And in turn, the NAB is feeling that pressure from below, because the market seems set to undergo another seismic shift. What do we mean by that?

Well, streaming hit the industry hard and fast, and by all accounts appeared to fundamentally change the paradigm. Every article you read in, say, 2010 declared the death of Linear TV and conventional advertising. But a decade on, the streaming market looks very different: fragmented, with lower subscription numbers, greater frustration from audiences regarding commissioning and content creation strategies, a lack of transparency, and an apparent need to resort to more traditional profit models, including – surprise, surprise – advertising. (This article by Vanity Fair gives interesting insight into the ‘burst of the streaming bubble’ from a creative perspective.)

What this potentially means is that Linear TV – pushed to the sidelines for a little while – now has the potential to re-storm the castle. And if this siege were to be powered by ATSC 3.0 – bringing audiences all the functionality and benefits they’ve grown used to with streaming, and more – then victory could be sweet indeed.

What this means for us. And our customers.

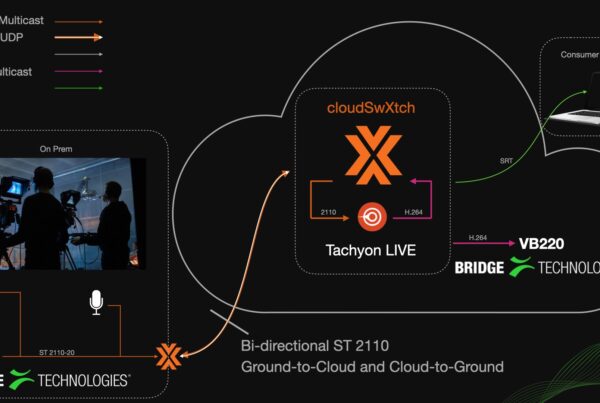

Much of the discussion in the field of ATSC 3.0 focuses on the consumer market and the incorporation of ATSC 3.0 into consumer tech. But of equal importance is the readiness of professional equipment to handle the standard. That’s where we come in. We’re strong proponents of ATSC 3.0, and as well as developing a range of equipment specifically for the standard (for instance, the SLM 1530 – our ATSC 3.0 Handheld Signal Level Meter), we’ve also future-proofed our range of demodulators, decoders, transcoders, and monitoring blades to accommodate the standard and allow broadcasters full flexibility in their operations. We’re ahead of the curve in working to introduce the important new encryption standards that come with ATSC 3.0 into professional equipment, and we will continue to support the industry – both technologically and as a vocal advocate of ATSC 3.0 – as we push forward into the future of Next Gen TV.